Mid-2024 AI dev tools temperature check

The 2024 Stack Overflow Developer Survey is out and there are interesting results for AI tools and how developers feel about them. 65k devs were surveyed over 30 days from May to June 2024. It was a pleasant surprise to see that the average time spent responding to the survey was 21 minutes! Yes, it's a long survey but it also signals very high engagement, giving me a lot of confidence in the responses.

How do devs feel about AI?

The survey had 11 questions about AI grouped into 3 categories:

Sentiment and usage

- Usage continues to increase, with a jump from 44% to 62% compared to 2023, still leaving a lot of room for adoption growth.

- Favorable sentiment has gone down, though, dipping 5 percentage points from 77% in 2023 to 72%.

- The question was "How favorable is your stance on using AI tools as part of your development workflow?"

- Those who responded "Indifferent" were quite high at 19%, indicating that about 1 in 5 devs surveyed may be using AI tools due to a company or team mandate, rather than finding intrinsic value in the tools.
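
The year-over-year figures above can be read either as a change in percentage points or as a change relative to the 2023 value, and the two give quite different impressions. Here is a minimal Python sketch of that arithmetic using only the usage and sentiment numbers quoted above; the helper names are my own and purely illustrative.

```python
def point_change(before: float, after: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting value, expressed as a percentage."""
    return (after - before) / before * 100


# Figures quoted in this post (2023 -> 2024), in percent.
figures = {"usage": (44, 62), "favorable sentiment": (77, 72)}

for name, (before, after) in figures.items():
    print(
        f"{name}: {point_change(before, after):+.0f} points, "
        f"{relative_change(before, after):+.1f}% relative"
    )
```

Run as written, this prints +18 points (+40.9% relative) for usage and -5 points (-6.5% relative) for favorable sentiment.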

Developer tools

- The top 3 benefits of using AI tools were:

- Increased productivity (81%) - Productivity remains a mythical concept that is extremely hard to measure, which suggests it works as a subjective measure comparable to CSAT. Read that way, such a high response rate is good news.

- Faster learning (62%) - Predictably, faster learning ranked higher for devs who identified themselves as students or as learning how to code.

- Greater efficiency (59%) - A topic for a future post: I would like to explore the differences between perceived productivity and efficiency in coding.

- The accuracy of AI output continues to be questioned with only 43% of respondents feeling confident and 31% feeling skeptical.

- More than a quarter of respondents (27%) neither trust nor distrust accuracy, a huge opportunity for AI dev tool companies to capture!

- Confidence in AI handling complex tasks is still very low, with almost half of respondents (45%) believing the tools are not good enough.

- With 33% thinking AI is good but not great with complex tasks and 21% not sure if AI is good or bad, AI tools still have a long way to go in understanding complex environments, architectures, and setups.

- Looking forward, devs want to see more of the following workflows supported by AI dev tools:

- Generating content or synthetic data

- Documenting code

- Searching for answers

- Testing code

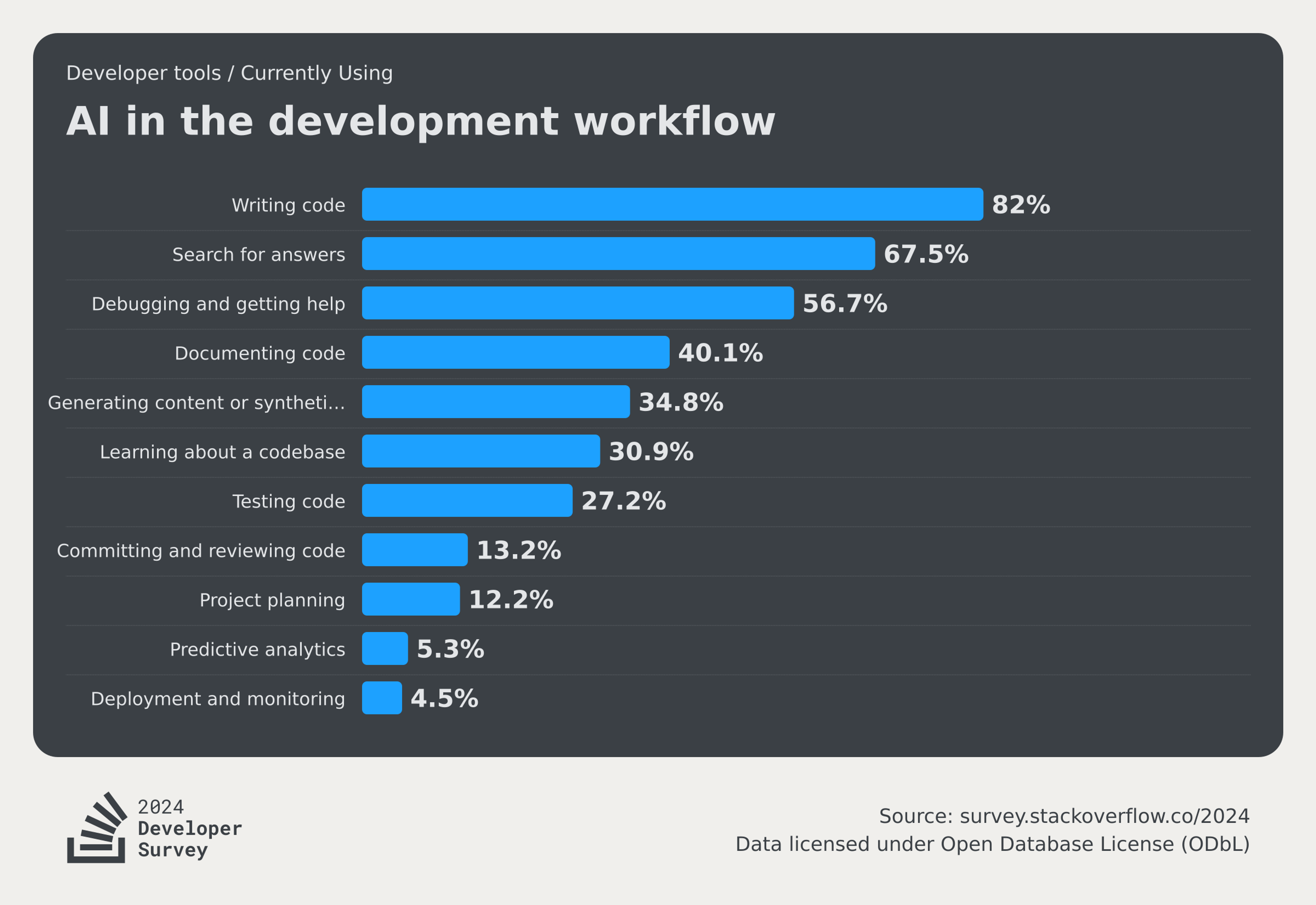

AI in the dev workflow

This was probably the most interesting question, and it prompts a few theories:

Devs currently using

- This is a great source for identifying dev use cases by popularity, especially for CSM or dev advocate teams looking to help developers succeed and make the most out of AI dev tools.

- AI tools seem to be delivering good results and are heavily used for the most important parts of dev workflows:

- "Writing code" - most likely with autocomplete.

- "Search for answers" and "Debugging and getting help" - most likely using AI chat.

- There were surprises among the low-ranked use cases, hinting at low confidence in the quality of the output:

- "Documenting code" with 40% at #4.

- "Testing code" with 27% at #7.

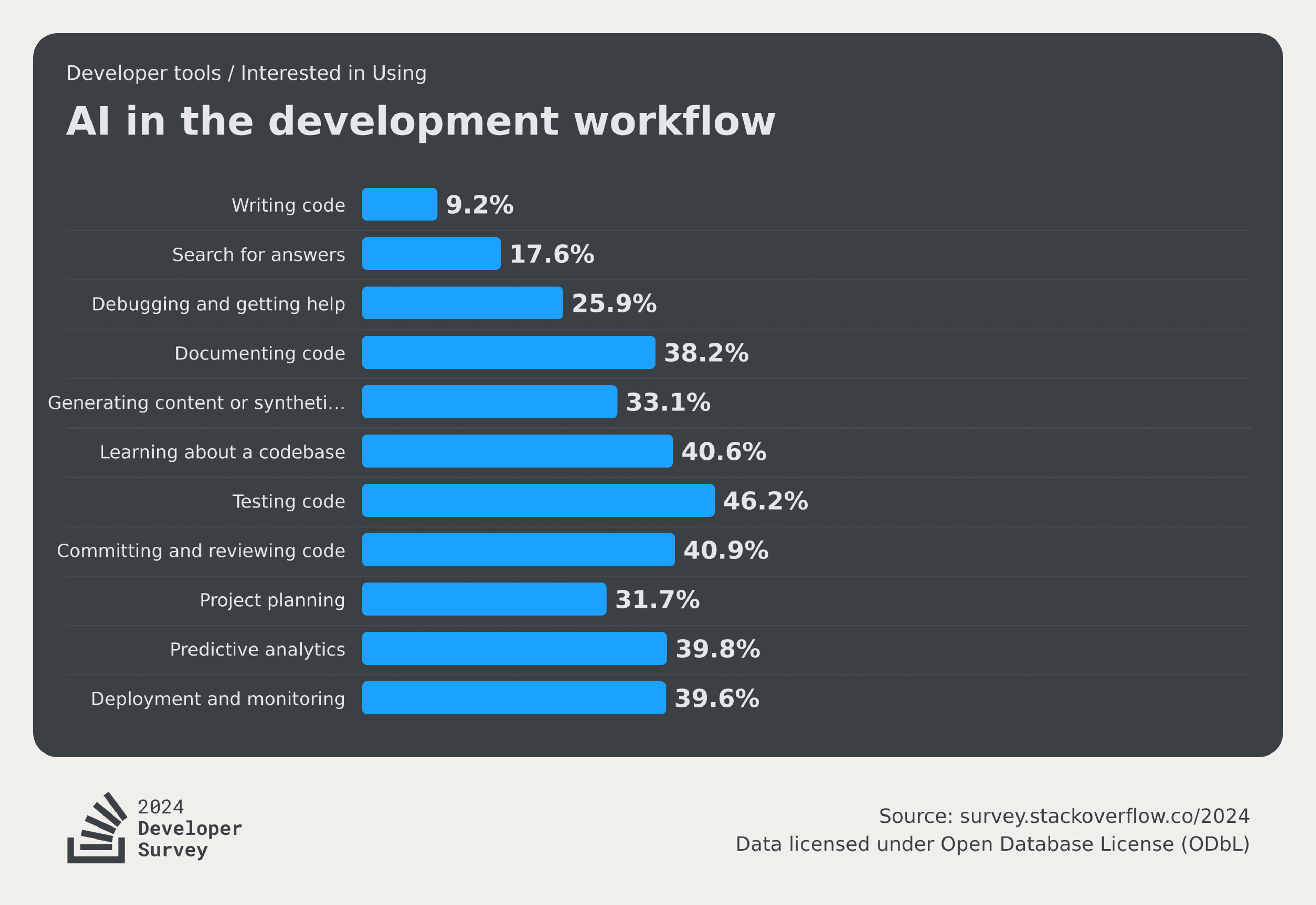

Interested in using

- "Testing code" ranks as the top workflow for devs who are not currently using AI. That matches my experience as a director of Technical Advisory at Sourcegraph, enabling Cody for enterprise prospects, where it was one of the workflows most often requested for enablement and for additional product features.

- I'm surprised to see that workflows that ranked low for devs currently using AI dev tools, such as "Committing and reviewing code", "Project planning", "Predictive analytics", and "Deployment and monitoring", ranked as high priorities for devs not using AI tools.

- These are not currently supported in the most popular Code AI tools such as Copilot, IntelliCode, and Gemini.

Efficacy and ethics

- Glad to see that most devs (68%) don't perceive AI tools as a threat. Interestingly, this number is much lower (58%) for devs who are learning to code. This hints that it is mainly devs with hands-on experience of the tools, who are somewhat disappointed in the current quality of the output, who feel reassured that AI is not a threat yet.

- Misinformation or disinformation is the top concern for devs (79%), closely followed by incorrect attribution (64%) and biased results (50%). This confirms that most devs still feel like we're in the wild west of AI output and that it still can't be fully trusted.

- This is reaffirmed by 66% of respondents saying they don't trust the output outright.

- Context - Still a key piece in how success and quality of responses are perceived; 63% of respondents claim the AI dev tools lack context about their codebase, environments, architecture, and setups.

- Lack of policies and training - About 1/3 of devs see this as a challenge to fully embrace AI dev tools.

Looking forward

The last question of the survey, although grouped under ethics, was an open-ended one: "Please describe how you would expect your workflow to be different, if at all, in 1 year as a result of AI advancements". Grouping the most popular terms in the responses shows devs hoping for:

- Seamless, Time - Hinting that devs hope for better DX/UX and for help reclaiming time from toil-heavy tasks.

- Well, code, Able code - Devs want help writing better code.

- Complex task - They want the AI tools they use to get better at understanding complex tasks and environments.

- Well, integration - More AI tools are starting to integrate with context sources other than code. Code reviews, wikis, docs, specs, etc. will give AI tools more context and will hopefully produce better results.